Dataset: Where Are You? (WAY)

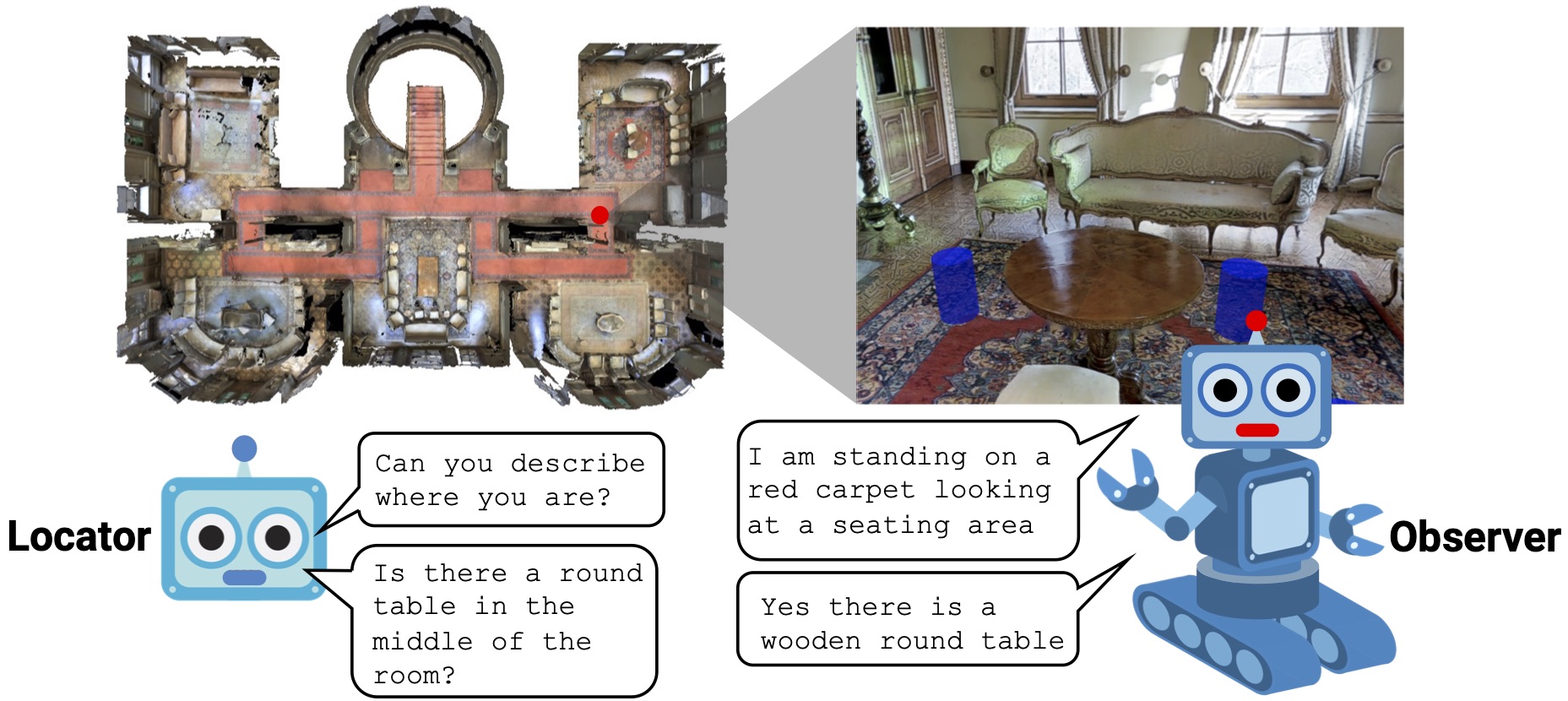

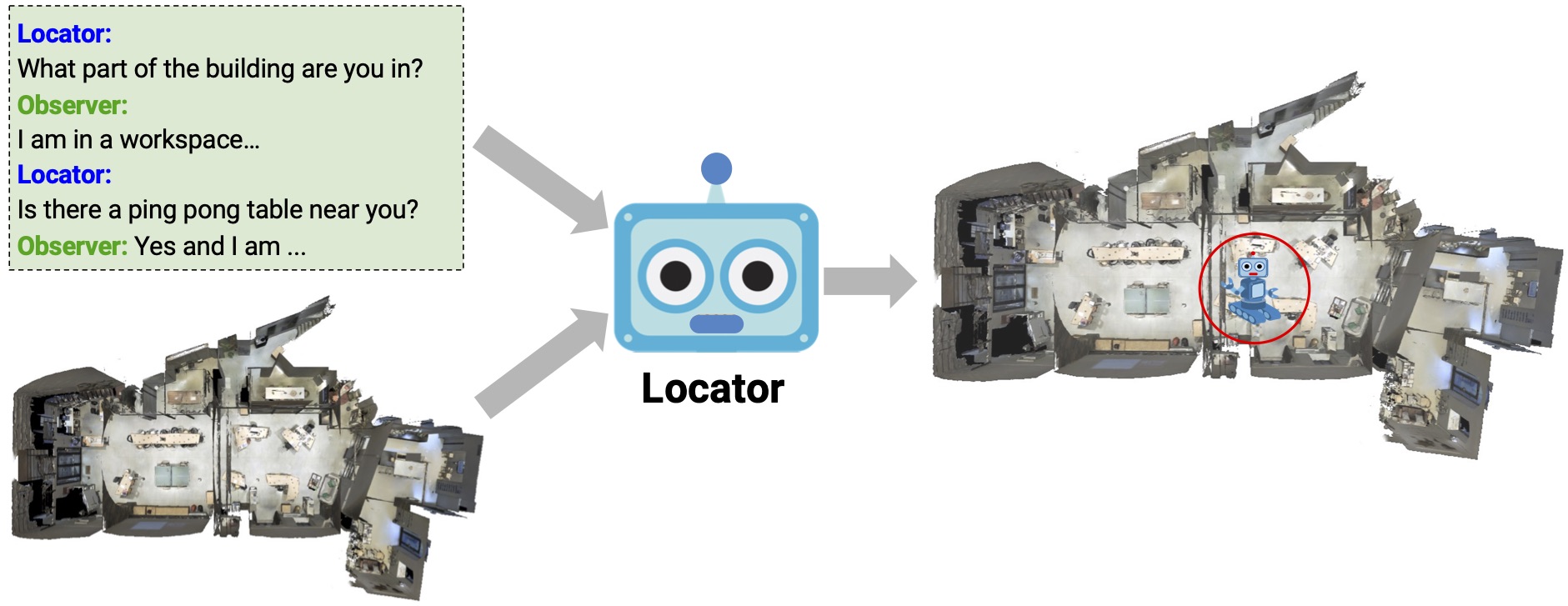

The Where Are You? (WAY) dataset contains ~6k dialogs in which two humans -- an Observer and a Locator -- complete a cooperative localization task. The Observer is spawned at random in a 3D environment and can navigate from first-person views while answering questions from the Locator. The Locator must localize the Observer in a map by asking questions and giving instructions. Based on this dataset, we define three challenging tasks: Localization from Embodied Dialog or LED (localizing the Observer from dialog history), Embodied Visual Dialog (modeling the Observer), and Cooperative Localization (modeling both agents).

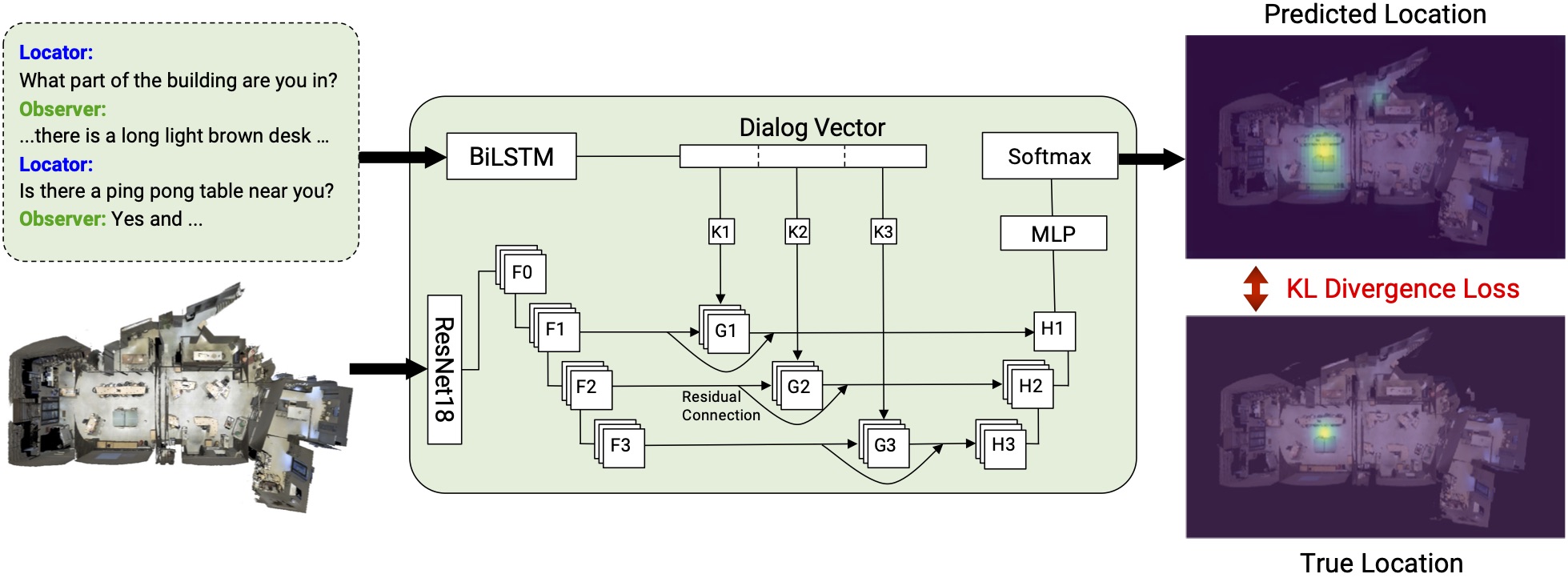

Task: Localization from Embodied Dialog (LED)

Localization from Embodied Dialog (LED) is the state estimation problem of localizing the Observer given a map and a partial or complete dialog between the Locator and the Observer. This task specifically tests a model's ability to accurately encode a dialog and effectively ground it in the visual representation of an environment.
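To make the input and output of the task concrete, here is a minimal Python sketch of an LED instance and a trivial predictor. Everything in it is an assumption for illustration: the `LEDExample` container, its field names, the pixel coordinate convention, the sample dialog, and the Euclidean `localization_error` function are hypothetical and do not reflect the WAY file format, the Graph_LED code, or the official evaluation protocol.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Tuple


@dataclass
class LEDExample:
    """Hypothetical container for one LED instance (not the WAY file format)."""
    dialog: List[Tuple[str, str]]     # (speaker, utterance) turns, partial or complete
    map_size: Tuple[int, int]         # assumed (width, height) of a top-down map, in pixels
    observer_xy: Tuple[float, float]  # assumed ground-truth Observer location on the map


def predict_center(example: LEDExample) -> Tuple[float, float]:
    """Trivial baseline: ignore the dialog and guess the center of the map."""
    width, height = example.map_size
    return (width / 2.0, height / 2.0)


def localization_error(pred: Tuple[float, float], target: Tuple[float, float]) -> float:
    """Euclidean distance between predicted and true locations (an assumed metric)."""
    return hypot(pred[0] - target[0], pred[1] - target[1])


# Made-up example data, for illustration only.
example = LEDExample(
    dialog=[
        ("Locator", "What do you see around you?"),
        ("Observer", "I am in a kitchen next to a table."),
    ],
    map_size=(100, 80),
    observer_xy=(53.0, 44.0),
)

pred = predict_center(example)
error = localization_error(pred, example.observer_xy)
print(f"predicted location: {pred}, error: {error:.1f} px")
```

A real LED model would replace `predict_center` with a function that encodes the dialog and grounds it in the map, which is the ability the task is designed to test.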

The WAY codebase and most recent LED models are available at:

https://github.com/meera1hahn/Graph_LED/

The test server and leaderboard are live on EvalAI:

https://eval.ai/web/challenges/challenge-page/1206

News

Paper

Where Are You? Localization from Embodied Dialog