About Me

I am a Research Scientist at Google Research in Atlanta. I recently completed my PhD in Computer Science at the Georgia Institute of Technology, where I was advised by James M. Rehg. At Georgia Tech I also had the pleasure of working closely with Dhruv Batra and Devi Parikh, and I collaborated with Peter Anderson and Stefan Lee. My research focuses on multi-modal modeling of vision and natural language for applications in artificial intelligence. My long-term research goal is to develop multi-modal systems capable of supporting robotic or AR assistants that can seamlessly interact with humans. My current research revolves around training embodied agents (in simulation) to perform complex semantic grounding tasks.

In the summer of 2020, I was an intern at FAIR working with Abhinav Gupta. In the summer of 2019, I was a research intern at Facebook Reality Labs (FRL) working with James Hillis and Dhruv Batra. In the summer of 2018, I was a research intern at NEC Labs working with Asim Kadav and Hans Peter Graf.

As an undergraduate at Emory University, I worked in a Natural Language Processing lab for two years under Dr. Jinho Choi. I also spent a summer working with Dr. Mubarak Shah at the University of Central Florida.

Education

- Georgia Institute of Technology - Presidential PhD Fellowship (2016 - 2020)

- Ph.D. in Computer Science, Georgia Institute of Technology, July 2022

- B.S. in Computer Science and Mathematics, Emory University, 2016

Publications

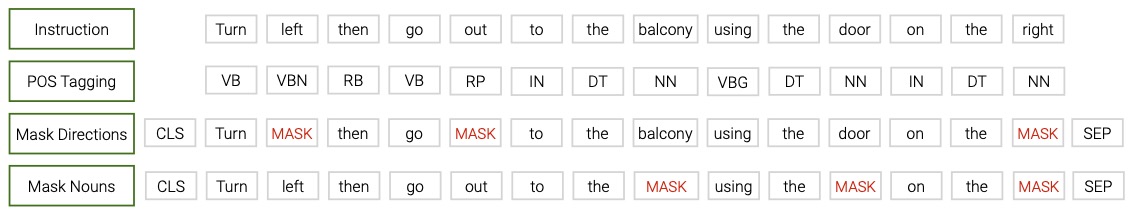

Which way is 'right'?: Uncovering Limitations of Vision-and-Language Navigation Models

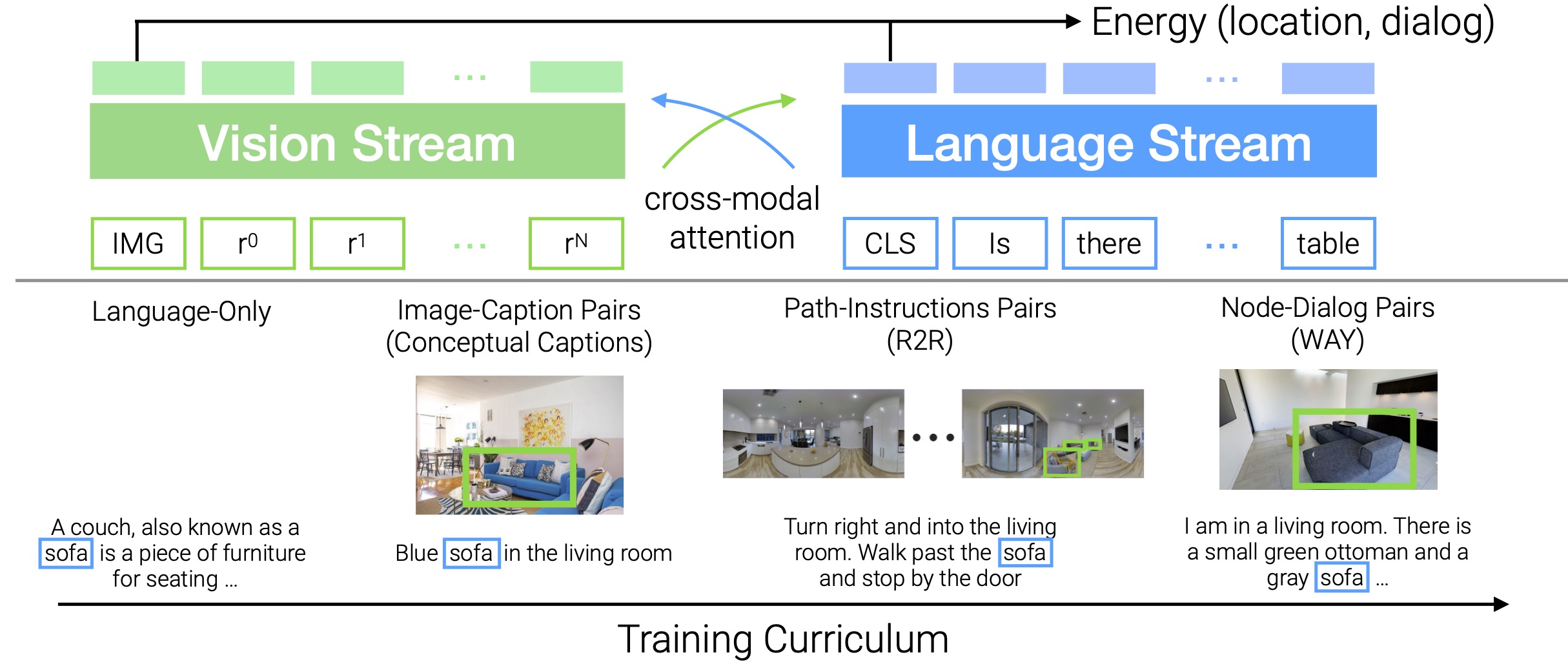

Transformer-based Localization from Embodied Dialog with Large-scale Pre-training

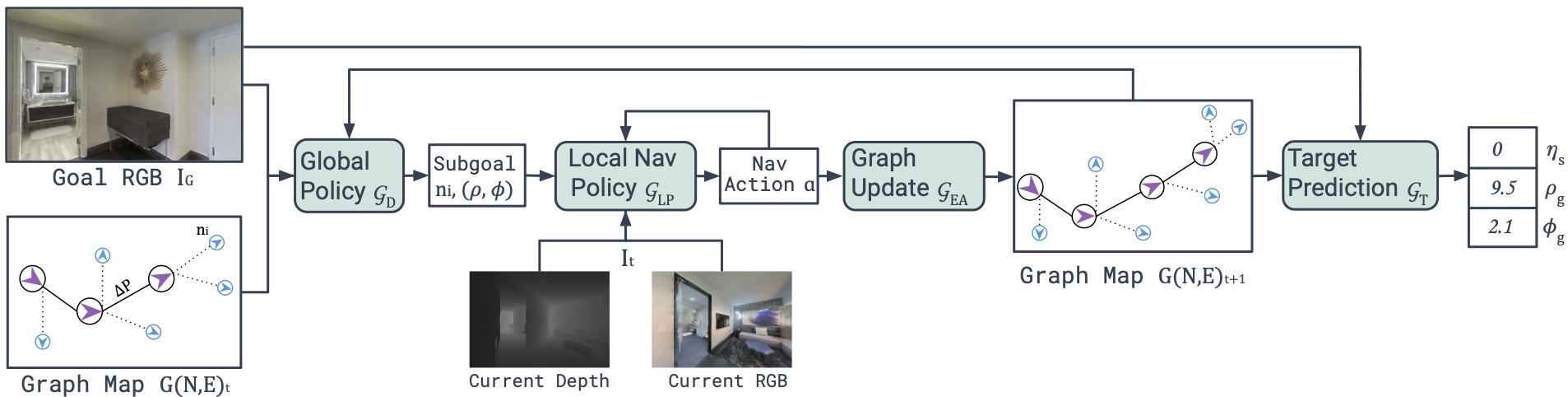

No RL, No Simulation: Learning to Navigate without Navigating

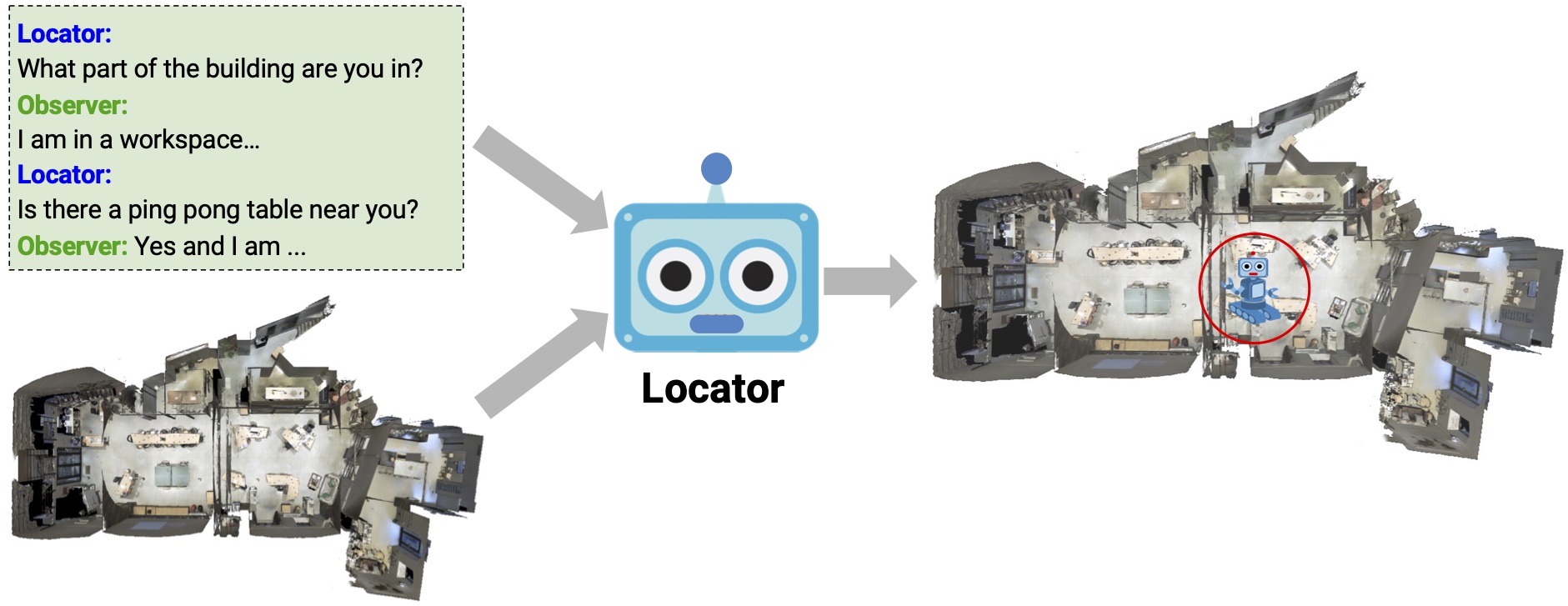

Where Are You? Localization from Embodied Dialog

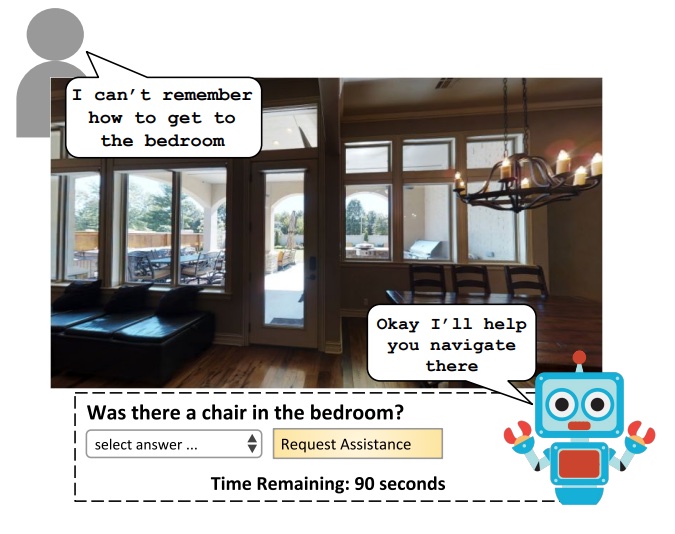

Learning a Visually Grounded Memory Assistant

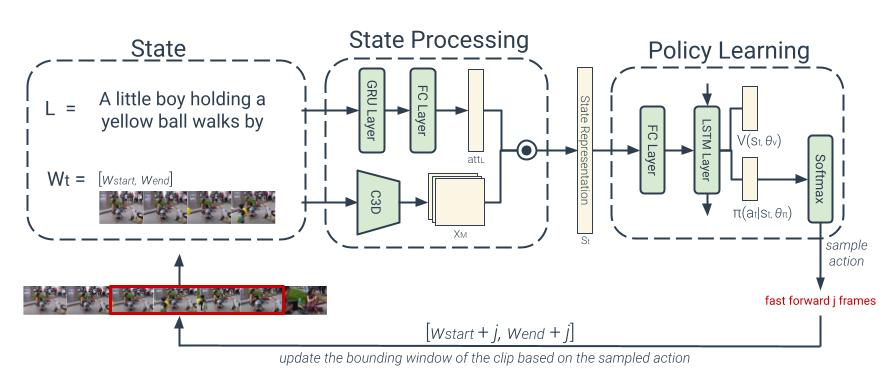

Tripping through time: Efficient Localization of Activities in Videos

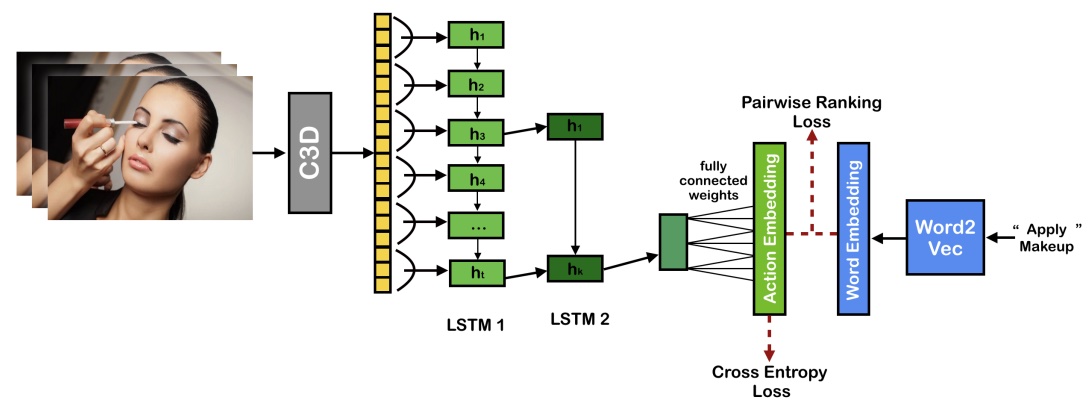

Action2Vec: A Crossmodal Embedding Approach to Action Learning

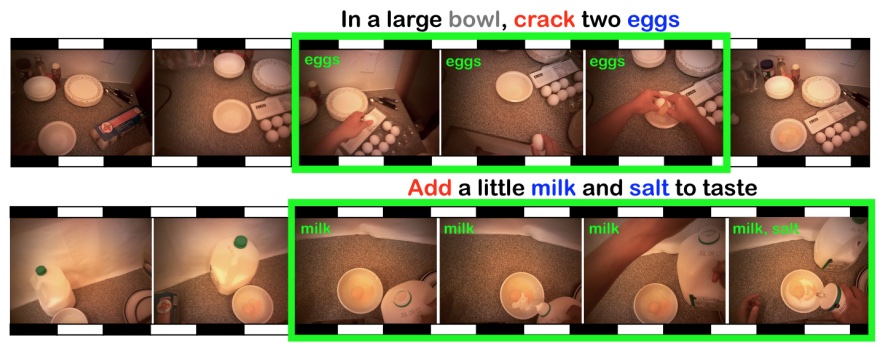

Localizing and Aligning Fine-Grained Actions to Sparse Instructions

Situated Bayesian Reasoning Framework for Robots Operating in Diverse Everyday Environments

Deep Tracking: Visual Tracking Using Deep Convolutional Networks

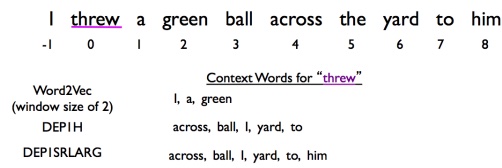

Advances in Methods and Evaluations for Distributional Semantic Models

Talks

No RL, No Simulation: Learning to Navigate without Navigating

NeurIPS 2021

Where Are You? Localization from Embodied Dialog

EMNLP 2020